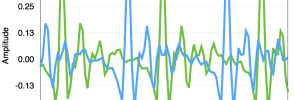

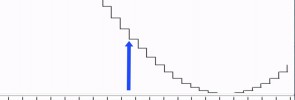

When we say that a signal is non-stationary, we mean that its properties, such as its spectrum, change over time. To analyse a signal like this, we first assume that these properties stay approximately constant over some short period of time, called a frame. We can then analyse the signal one frame at a time: this is short-term analysis. When extracting a frame, it's important to apply a window with tapered edges, to remove the discontinuities that would otherwise appear at the start and end of the frame. Here we see why that is, and what happens if we forget to apply a tapered window.
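The framing-then-windowing step can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not the method used to prepare the materials below. The 16 kHz sample rate, the 25 ms frame length, the 10 ms hop, the choice of a Hann window as the taper, and the helper names `hann`, `frames` and `apply_window` are all assumptions chosen for the example.

```python
import math

def hann(n):
    """Symmetric Hann window of length n: starts and ends at zero."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def frames(signal, frame_len, hop):
    """Split a signal into (possibly overlapping) frames of frame_len samples.

    Any samples left over at the end that cannot fill a whole frame are dropped.
    """
    return [signal[start:start + frame_len]
            for start in range(0, len(signal) - frame_len + 1, hop)]

def apply_window(frame, window):
    """Multiply a frame sample-by-sample by a window of the same length."""
    return [x * w for x, w in zip(frame, window)]

# Assumed example settings: 16 kHz audio, 25 ms frames, 10 ms hop.
fs = 16000
frame_len = round(0.025 * fs)  # 400 samples
hop = round(0.010 * fs)        # 160 samples

# Hypothetical 1-second test signal: a 440 Hz tone stands in for real speech.
signal = [math.sin(2 * math.pi * 440 * t / fs) for t in range(fs)]

window = hann(frame_len)
windowed = [apply_window(f, window) for f in frames(signal, frame_len, hop)]
```

Because the Hann window is zero at both ends, every windowed frame starts and ends at (or extremely close to) zero, so the jump that would otherwise occur where the frame was cut out of the waveform disappears.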

Try it for yourself – here are the materials to download:

- A frame of speech extracted from a larger waveform: framed

- The same frame of speech, after a tapered window has been applied: framed_windowed
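To see what forgetting the taper does to the spectrum, the sketch below compares the magnitude spectrum of an untapered frame with that of the same frame after a Hann window has been applied. Since the format of the downloadable files isn't specified here, it uses a synthetic sinusoid as a hypothetical stand-in for `framed`. The 256-sample frame, the 10.3-cycle tone, the Hann window, the bin chosen to measure leakage, and the helper names are all assumptions for illustration.

```python
import cmath
import math

def hann(n):
    """Symmetric Hann window of length n: starts and ends at zero."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def magnitude_spectrum(x):
    """Naive DFT magnitude for bins 0..N/2 (O(N^2), fine for one short frame)."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]

def level_db(spectrum, bin_index):
    """Level of one bin relative to the spectral peak, in dB."""
    return 20 * math.log10(spectrum[bin_index] / max(spectrum))

N = 256
# Hypothetical stand-in for 'framed': a tone completing 10.3 cycles per frame,
# so the frame ends mid-cycle and its first and last samples do not line up.
framed = [math.sin(2 * math.pi * 10.3 * t / N + 0.5) for t in range(N)]
# Hypothetical stand-in for 'framed_windowed': the same frame, Hann-tapered.
framed_windowed = [x * w for x, w in zip(framed, hann(N))]

rect_spec = magnitude_spectrum(framed)
hann_spec = magnitude_spectrum(framed_windowed)

far_bin = 40  # a bin well away from the tone's true frequency (bin 10.3)
print("no taper:   %.1f dB" % level_db(rect_spec, far_bin))
print("Hann taper: %.1f dB" % level_db(hann_spec, far_bin))
```

Without the taper, the discontinuity at the frame edges spreads ("leaks") energy into bins far from the tone's frequency. With the Hann taper, the level at those distant bins is much lower, at the cost of a somewhat wider main peak around the tone.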